On the morning of 13 January, the state of Hawaii was sent into a panic when an SMS ballistic missile warning was issued, giving residents perhaps 10 minutes to prepare for a nuclear strike, with the helpful wording 'this is not a drill' appended to the message. Not to be outdone, three days later the Japanese national broadcaster NHK sent out a text message warning of an impending North Korean missile strike. Thankfully, both were false alarms. In the aftermath, FCC Chairman Ajit Pai warned the Hawaii Emergency Management Agency not to become the 'boy who cried wolf'.

Is the wolf really there? Aesop's fable of the Boy Who Cried Wolf is perhaps 2,500 years old, and longevity in a folk tale is an indicator of universal significance. The story holds two lessons. The first is for the signaller: telling the truth makes you more likely to be believed, so if being believed matters to you, you should tell the truth. The second is for interpreters: even an untrustworthy signal can sometimes be veridical.

These lessons find a modern interpretation within probability theory and inference. The value of a signal - a boy crying wolf, a fingerprint at a crime scene, a car alarm ringing - is measured in terms of its likelihood ratio. This is the probability of that signal being seen if a hypothesis is true - wolves are attacking the sheep, the prime suspect is guilty, your car is being broken into - divided by the probability of that signal being seen if it's false. Good evidence is that which is highly consistent with a hypothesis's truth but inconsistent with its falsity, or vice versa.

But the final probability is also driven by the prior probability: the probability you'd be right to assign to the hypothesis before taking the evidence into account. How frequent are wolf attacks on the sheep? How likely is a specific individual to be guilty of a crime? What's the probability of your car being broken into, right now? Strictly speaking, it is the odds that multiply: the final (posterior) odds are the product of the prior odds and the likelihood ratio of the signal, and those odds can then be converted back into a probability.
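The update described above can be sketched in a few lines of Python. This is a minimal illustration of the odds form of Bayes' theorem rather than anything prescribed in the text; the function name, parameter names and the car-alarm numbers in the usage line are hypothetical.

```python
def posterior_probability(prior: float,
                          p_signal_if_true: float,
                          p_signal_if_false: float) -> float:
    """Return P(hypothesis | signal) using the odds form of Bayes' theorem."""
    # Likelihood ratio: how much more probable the signal is if the hypothesis is true
    likelihood_ratio = p_signal_if_true / p_signal_if_false
    # Convert the prior probability into odds in favour of the hypothesis
    prior_odds = prior / (1 - prior)
    # Posterior odds = prior odds x likelihood ratio
    posterior_odds = prior_odds * likelihood_ratio
    # Convert the odds back into a probability
    return posterior_odds / (1 + posterior_odds)


# Made-up car-alarm figures: a 1-in-1000 chance of a break-in right now,
# an alarm that rings 90% of the time during a break-in and 1% of the time otherwise
car_alarm = posterior_probability(0.001, 0.9, 0.01)
print(f"P(break-in | alarm) = {car_alarm:.3f}")
```

Even a fairly informative alarm (a likelihood ratio of 90) leaves the break-in improbable here, because the prior odds are so long.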

The last thing to take into account is the action threshold. At what probability does it make sense to act? Broadly, this is driven by the costs and benefits of different outcomes. How much effort does it take to run uphill to the sheepfold? How bad is it if a wolf eats a sheep - or a shepherd? If the cost of acting is low compared to the cost of failing to act, the action threshold will be low; if it's relatively high, you'd need to be more certain before it made sense to act on the information.
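One simple way to make the action threshold concrete is to act whenever the expected loss from inaction exceeds the cost of acting. This sketch rests on a simplifying assumption not stated in the text: acting always carries a fixed cost and always prevents the loss. The function names and cost figures are hypothetical.

```python
def action_threshold(cost_of_acting: float, loss_if_ignored: float) -> float:
    """Probability above which acting has the lower expected cost.

    Simplifying assumption: acting costs `cost_of_acting` whether or not the
    event is real and fully prevents the loss; ignoring the signal costs
    `loss_if_ignored`, but only if the event is real.
    """
    # Act when p * loss_if_ignored > cost_of_acting, i.e. when p > cost / loss
    return cost_of_acting / loss_if_ignored


def should_act(probability: float, cost_of_acting: float, loss_if_ignored: float) -> bool:
    return probability > action_threshold(cost_of_acting, loss_if_ignored)


# Hypothetical units: climbing to the sheepfold costs 1, losing a sheep costs 10
print(action_threshold(1, 10))
print(should_act(0.5, 1, 10))
print(should_act(0.05, 1, 10))
```

The cheaper the action relative to the stakes, the lower the threshold, which is the relationship described above.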

All of this neatly explains the Boy Who Cried Wolf. Assuming the villagers are used to highly reliable shepherds, the likelihood ratio for a cry of 'wolf' is going to be high. Wolf attacks might be rare - say 1% at any given time - but if the cry of 'wolf' is virtually certain (99%) in the event of an attack, and extremely unlikely (1%) otherwise, the final probability that an attack is happening, upon hearing the cry, would be 50%. Assuming the cost of climbing the hill is comfortably outweighed by the cost of losing a sheep, this is certainly enough to rouse the villagers to action.
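The 50% figure can be checked by simple counting. This natural-frequency sketch uses the probabilities given above; the population of 10,000 'moments' is an arbitrary size chosen only for illustration, and the function name is hypothetical.

```python
def posterior_by_counting(moments: int = 10_000,
                          p_attack: float = 0.01,
                          p_cry_if_attack: float = 0.99,
                          p_cry_if_no_attack: float = 0.01) -> tuple[float, float, float]:
    # Moments at which a wolf is actually attacking (e.g. 100 of 10,000)
    attacks = moments * p_attack
    # Cries that coincide with a real attack
    true_cries = attacks * p_cry_if_attack
    # Cries raised when there is no wolf at all
    false_cries = (moments - attacks) * p_cry_if_no_attack
    # Fraction of all cries that coincide with a real attack
    posterior = true_cries / (true_cries + false_cries)
    return true_cries, false_cries, posterior


true_cries, false_cries, posterior = posterior_by_counting()
print(f"{true_cries:.0f} true cries, {false_cries:.0f} false cries, "
      f"P(attack | cry) = {posterior:.2f}")
```

The rarity of attacks and the rarity of false cries cancel out: there are as many false cries as true ones, hence the even odds.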

But a couple of days - and false warnings - down the line, the villagers have learned more about the likelihood ratio of this new shepherd's alarm. Now they might be justified in treating a cry of 'wolf' as carrying very little information, or even as making a wolf attack less likely than average. Whatever new likelihood ratio they assign to the signal, the tragic result is village-wide apathy vis-à-vis wolf defence.
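To see why a degraded likelihood ratio produces apathy, we can hold the prior at the 1% used above and vary the likelihood ratio. A ratio of 99 corresponds to the reliable shepherd; the smaller values are hypothetical stand-ins for the villagers' revised assessment of the new one.

```python
def posterior_from_lr(prior: float, likelihood_ratio: float) -> float:
    # Odds form of Bayes' theorem: posterior odds = prior odds x likelihood ratio
    odds = prior / (1 - prior) * likelihood_ratio
    return odds / (1 + odds)


PRIOR = 0.01  # 1% chance of a wolf attack at any given time
for lr in (99, 10, 1, 0.5):
    print(f"LR {lr:>4}: P(attack | cry) = {posterior_from_lr(PRIOR, lr):.3f}")
```

A ratio of 1 leaves the probability exactly where it started, and a ratio below 1 makes an attack seem less likely than if the boy had said nothing.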

As a general rule, for a signal to induce action, its likelihood ratio needs, for most practical purposes, to be of at least a similar order of magnitude to the prior odds against the event. An event with a prior probability of 1 in 1,000 (odds of 999:1 against) needs a signal with a likelihood ratio of roughly 1,000:1 (strictly, 999:1) to become 50% probable. To put it in Carl Sagan's words, extraordinary claims require extraordinary evidence.
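Rearranging the odds form of Bayes' theorem gives the likelihood ratio a signal needs to lift a given prior to a target probability. This is a small sketch with a hypothetical function name; the default target of 50% matches the example above.

```python
def required_likelihood_ratio(prior: float, target: float = 0.5) -> float:
    # posterior odds = prior odds x LR, so LR = target odds / prior odds
    prior_odds = prior / (1 - prior)
    target_odds = target / (1 - target)
    return target_odds / prior_odds


for prior in (0.01, 0.001, 0.000001):
    print(f"prior {prior:g}: LR needed for 50% = {required_likelihood_ratio(prior):,.0f}")
```

Each factor of ten in the rarity of an event demands roughly a factor of ten in the strength of the evidence.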

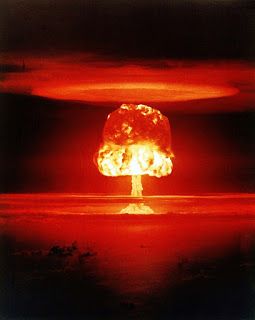

Still improbable, thankfully.

A nuclear strike on Hawaii is improbable - improbable enough for Hawaiians to spend their days going about their business instead of lurking in shelters. Whatever likelihood ratio residents assigned to the alert system before the false alarm, it was clearly enough to raise a strike's probability to a level that justified action of some sort: filling bathtubs, taking shelter, making a run for it.

The false alarm, however, will significantly dilute the likelihood ratio of subsequent alerts. When alerts are so infrequent, a single false alarm constitutes a sizeable portion of the evidence. If false alarms are more frequent than nuclear strikes, the final probability of an impending strike, in the event of an alarm, will always be below 50%, even if every real strike is announced. If they're ten times more frequent - and who is to say they are not? - then the final probability can be no more than about 9% (1 in 11). Where is Hawaii's action threshold? Somewhere between 10% and 100%? Who knows. Restoring trust in the system must clearly be a salient priority. A second false alarm would be a disaster.
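The ceiling on an alert's credibility follows from comparing how often real strikes and false alarms occur. This sketch assumes, as the best case for the alert system, that every real strike triggers an alert; the function and parameter names are hypothetical, and no actual false-alarm rates are implied.

```python
def max_posterior(false_alarms_per_real_strike: float,
                  p_alert_if_strike: float = 1.0) -> float:
    # For N real strikes there are p_alert_if_strike * N true alerts and
    # false_alarms_per_real_strike * N false ones; N cancels out.
    true_alerts = p_alert_if_strike
    return true_alerts / (true_alerts + false_alarms_per_real_strike)


for ratio in (0.1, 1, 10):
    print(f"{ratio:>4} false alarms per real strike: "
          f"P(strike | alert) <= {max_posterior(ratio):.3f}")
```

Any alert that misses some real strikes (a `p_alert_if_strike` below 1) only pushes these figures lower, which is why they act as upper bounds.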